· 18 min read

Using Metaphone to handle bad transcriptions in voice search for sommeliers

I recently prototyped a hands-free inventory counting system for sommeliers at a popular Manhattan Italian restaurant with Gabriel, my partner at Baxter.

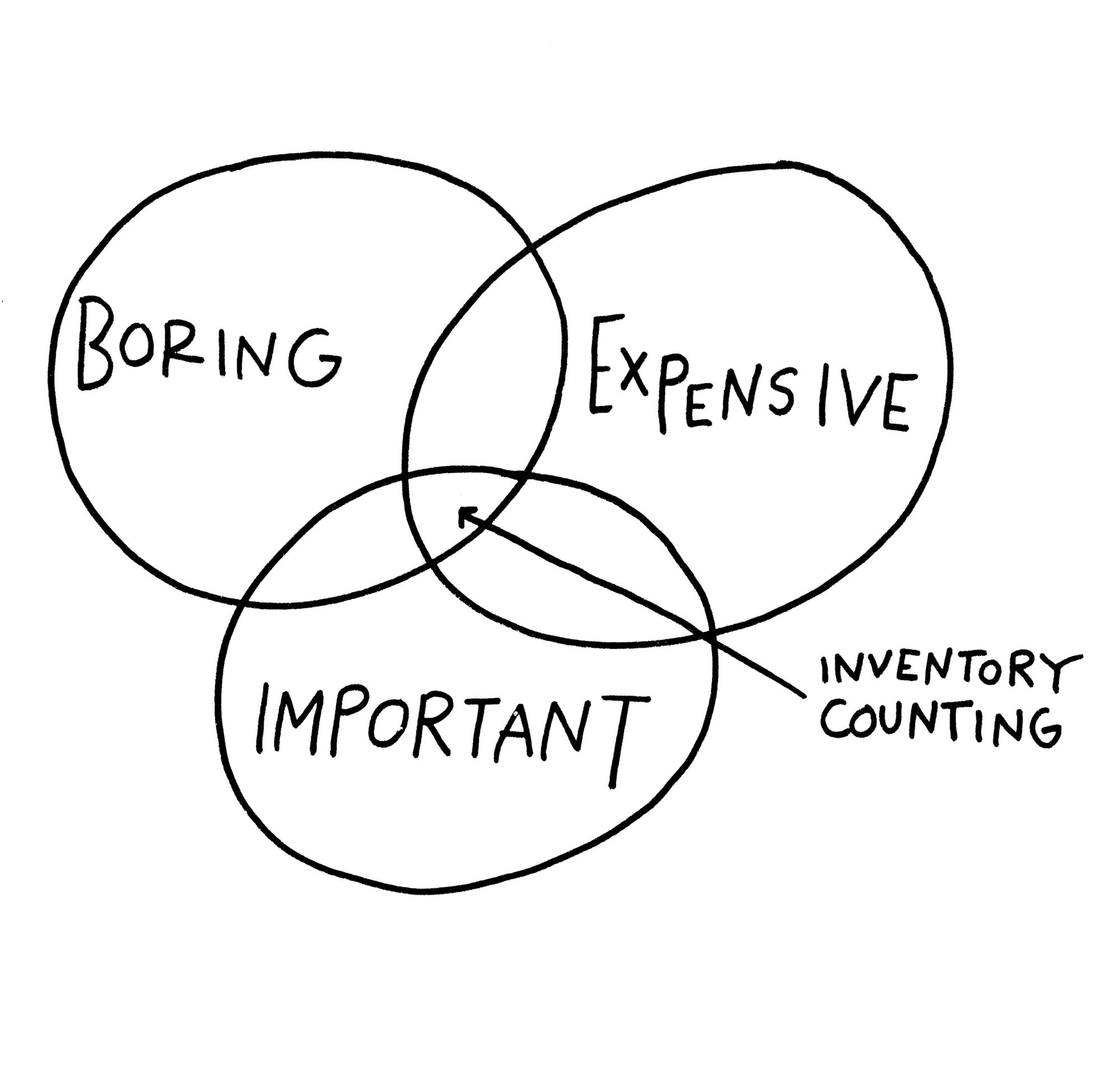

I know the general manager (GM) at this restaurant – henceforth referred to as Ristorante – personally and he hates inventory counts. Most restaurant GMs do. They’re labor-intensive yet extremely important: regular inventory audits are critical for restaurants to control their margins.

Ristorante can clear well into 5 figures of revenue in a day. And its GM, being a skilled and responsible operator, requires his team to do an inventory audit every month. So Ristorante’s two sommeliers arrive early in the morning on the 1st of every month to count over 1,500 different wines stored across 3 bars, a cellar, a fridge, and a storage room. It takes them over 6 hours, and the task bleeds into daily operations.

It’s painful.

It’s also just the kind of messy real-world problem that Gabriel and I want to try to solve for a market: is there any way we could cut the time it took these sommeliers to count inventory by half?

I popped into Ristorante on April 1st, a busy Saturday, to observe an inventory count.

Ristorante’s wine storage is organized but cramped. At the bars, many wine bottles sit on high shelves and sommeliers might be standing precariously on a stool or counter. Their hands are often not available – they’re moving boxes, bottles, or holding a bottle up so they can identify what it is.

Counting wine looks something like this:

- Identify the box/bin or a bottle of wine you want to count. Count it.

- With a laptop or phone next to you, look up the wine you’re looking for in Bevager, Ristorante’s inventory management system.

- Enter the count and save it.

Each wine takes anywhere from 15-60 seconds, of which 80% of the time goes to step 2: looking up the wine in Bevager. This is due to a combination of the following:

- Context switching: from using one’s arms and being perched somewhere, to using a laptop or phone.

- Wine names are entered into Bevager inconsistently: in some cases they use the wine name, in others they use the producer. These names might be on the front or back of the wine.

- Words in wine names are frequently repeated: “chateau”, for example.

- Vintages: you might need to pick the right vintage from 5+ entries.

- Bevager bugginess/search quality: Bevager is a fine system, but its inventory audit feature could use some love.

These are all solvable problems that take up a significant percentage of the time it takes to inventory one wine bottle.

So we decided to start by helping a sommelier retrieve a wine in Ristorante’s inventory system quickly and accurately without having to use her hands, with her voice.

Prototyping voice search for wines in 3 days

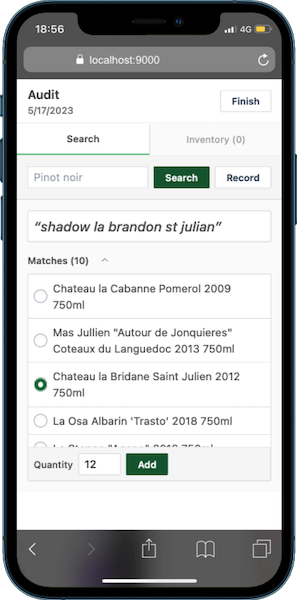

A critical component of our prototype was a voice search feature that allowed a sommelier to say the name of a wine and retrieve it correctly from over 1,500 SKUs in her inventory.

There are a bunch of obstacles to getting this kind of voice search to work well.

- Getting accurate transcriptions, even with state of the art models, is hard. We have to deal with French and Italian wines, various accents, and restaurant background noise.

- Inaccurate transcriptions are different from typos and misspellings. Handling them requires a different set of tricks.

- The user’s not at a keyboard. Auto-complete is an incredible feedback mechanism, but we don’t have access to it.

- There is exactly one relevant record. A sommelier’s search for the wine they’re looking for has only one matching record. It must be a top result, otherwise the search failed.

Perhaps the closest analog to this search use case is when you ask your phone to call a contact (e.g. “Google, call Katie.”). It turns out that this is pretty challenging!

Our goal was to validate feasibility and demand for a solution, so we needed to show something working well enough quickly.

I also wanted this to be easy to understand and operate: I didn’t want to have to (re-)learn, configure, and tune Solr, ElasticSearch, or Typesense. Besides, I only had about 1,500 records and these tools aren’t really designed to deal with the search use case I describe above, out of the box.

I managed to cobble together workable voice search in about 3 days using off-the-shelf components, a bag of information retrieval tricks, ~300 lines of Python, and a bunch of trial and error. It’s imperfect but it’s quick to implement, simple to operate, and it works with many classes of transcription errors with plenty of avenues for improvement.

- Code: The codebase is on Github.

- Demo: You can give it a try on Streamlit Cloud.

- Is this available? What happened next? Can you make this for me? I answer these questions in the Appendix.

Here’s how it works!

A summary of my approach

I use OpenAI’s Whisper for audio transcription and DuckDB to store and query my search index.

DuckDB’s full text search (FTS) extension easily handles the happy path: accurate transcriptions. The FTS extension is easy to use, but it does not handle misspellings or, in my case, poorly-transcribed audio.

OpenAI’s Whisper model is impressive, but:

- There is background noise in restaurants.

- Non-native speakers pronounce French and Italian wines poorly.

- Both of these issues affect audio clarity and, in turn, transcription quality.

- Even without these issues, Whisper is not perfect.

So my implementation needs to handle inaccurate transcriptions.

While there’s plenty of prior art on handling misspellings in search, the same techniques don’t work as well with bad audio transcriptions. This is because inaccurate transcriptions don’t look like misspelled words. They often look like gibberish – made up words, or strings of words that sound like the audio but don’t make sense together.

So I built two of my own search indexes in DuckDB to handle “mistranscriptions” using Metaphone, a phonetic algorithm that maps similar-sounding words to the same pronunciation.

In total, I have 3 indexes: 1 full text search index on wine names, and 2 that use Metaphones, which I’ll cover in greater detail below

At query time I do the following:

- Query the indexes in 5 different ways. Each method excels at different kinds of queries or transcription errors. I get 10 results from each method, increasing the likelihood that the wine the user is looking for is in my result set.

- Rank all those results using text similarity. I get the Jaro-Winkler similarity score between each result and the transcription. This tends to bubble the result up to the top 5.

- Return the top 5 ranked results. I’d say 80%+ of the time the result I was looking for ended up in the top 5, and it fared well with “adversarial” audio (comically bad accents, yelling in the background, etc.)

What do inaccurate transcriptions look like?

Inaccurate transcriptions are usually close to how the words actually sound.

Let’s say we have a wine in our inventory called Chateau Champignon.

Here are some potential transcriptions of “chateau”:

- shadow

- shatto

- chat oh

Here are some potential transcriptions of “champignon”:

- champagne on

- shomp inyon

- champ onion

- sham pig non

We may end up with any permutation of transcriptions for “chateau” and “champignon”. For example:

- shadow champagne on

- chat oh shomp inyon

- shatto sham pig non

Traditional search techniques to handle misspellings fail spectacularly on these kinds of errors. None of them look like misspellings or typos.

But they do sound the same, and this is where phonetic algorithms come in.

You can map inaccurate transcriptions to the same string using phonetic algorithms

Phonetic algorithms map words to pronunciations.

Different phonetic algorithms have different goals in mind, but there is a whole class of them that is designed to map similar-sounding words to the same pronunciation.

The earliest one of these is called Soundex, which was developed in the late 1800’s and patented in 1920, well before the advent of computing!

Why? To map similar-sounding surnames to each other for the US Census. The history here is really neat, and you can read this post by Luke Otwell to learn more if you’re interested.

In my implementation I used Metaphone, a successor to Soundex, for which there is an open-source Python implementation in pyphonetics.

Metaphone maps chateau champignon to XTXMPNN.

Here’s how Metaphone maps our transcriptions of Chateau Champignon above:

chateau

shadow:XTchat oh:XTshatto:XT

champignon

champagne on:XMPNNshomp inyon:XMPNYNchamp onion:XMPNNsham pig non:XMPNN

various permutations of the above

shadow champagne on:XTXMPNNchat oh shomp inyon:XTXMPNYNshatto sham pig non:XTXMPNN

Almost all of these map directly to the same Metaphone as Chateau Champignon!

To put this insight to work, our code creates two additional indexes: the Exact Metaphone Index and the Metaphone Token Index. These indexes are constructed in index.py.

The two Metaphone-based indexes

Assume we have three wines in our inventory:

- Chateau Champignon

- Cavalleri Collezione Esclusiva Chardonnay

- Chateau la Bridane Saint Julien

Here’s how we construct our two Metaphone indexes on those wines.

1. The Exact Metaphone Index

In this index we map the Metaphone for each wine’s full name to itself:

XTXMPNN→Chateau ChampignonKFLRKLSNSKLSFXRTN→Cavalleri Collezione Esclusiva ChardonnayXTLBRTNSNTJLN→Chateau la Bridane Saint Julien

This index is just a 1-to-1 mapping. It allows us to reverse look up a metaphone to a wine’s full name as it exists in our index.

2. The Metaphone Token Index

In this index we tokenize each wine name, get the Metaphone for each token, and construct an index on those tokens.

Here’s what the first step looks like:

Chateau Champignon→XT XMPNNCavaelleri Collezione Esclusiva Chardonnay→KFLR KLSN ESKLSF XRTNChateau la Bridane Saint Julien→XT L BRTN SNT JLN

Let’s call these “Metaphone strings”. Each token in the string is the Metaphone for a token in the original wine name. These strings are what we index.

Assuming that 1, 2, 3 are IDs that map to the wines in the list above, the Metaphone Token Index looks like this:

XT→[1, 3]XMPNN→[1]KFLR→[2]KLSN→[2]- … and so on.

Conveniently, this is just a regular full text search index on the Metaphone strings above. So we can use DuckDB’s full-text search extension again, saving the work to write code to construct or query the index.

This index allows us to reverse look up Metaphones for individual words in our transcript.

For example, a speaker might say only “champignon”, for which we get an inaccurate transcription like “shampinon”. Or a speaker might say 3 words in a wine name, but two of them are transcribed entirely incorrectly while the third has a Metaphone match.

This index gives us the means to salvage an inaccurate transcript as long as there’s a Metaphone match in it, somewhere.

Five query-time approaches

Combined, the following approaches are greater than the sum of their parts. Individually, they excel at dealing with different kinds of queries and transcription errors.

Each of the query methods is implemented in search.py.

Let’s add one more wine to our index for the following explanations. There are now 4 wines in our inventory:

- Chateau Champignon

- Cavalleri Collezione Esclusiva Chardonnay

- Chateau la Bridane Saint Julien

- Guy Breton Morgon

Here are the five query approaches we use.

1. DuckDB Full-Text Query

This was the first one I implemented, and it uses DuckDB’s full-text search extension.

Under the hood, DuckDB’s FTS extension implements BM-25, a widely-used ranking function.

It constructs the index with some basic defaults for English: a list of 571 stopwords, the Porter stemmer, lowercasing all text, ignoring all non-alphabetic lowercase characters, and stripping accents.

Pros

- BM-25’s ranking algorithm performs well: a query for “chateau champignon” will rank Chateau Champignon first, followed by the other hundred that include “chateau”.

- It works well as long as the most unique words of the wine name are transcribed accurately. For example, “shadow champignon” will likely return “Chateau Champignon”, but “chateau shampinyon” is a crapshoot because there are hundreds of wines with “chateau” in their name.

Cons

- It fails spectacularly on inaccurate transcriptions, usually returning zero or irrelevant results.

2. Exact Metaphone Query

Here, we do a reverse lookup for the transcription’s Metaphone in the Exact Metaphone Index I describe above:

- Get transcription metaphone: “shadow champagne on” yields

XTXMPNN - Reverse-lookup:

XTXMPNNyields the record for Chateau Champignon.

Pros

- Works with inaccurate transcriptions.

- High precision: it tends to match the exact wine the user is searching for, and nothing else.

Cons

- The user must say the entire wine as it exists in our index. If they just say “champignon” instead of “chateau champignon”, we are out of luck.

- Fails when a transcription’s Metaphone does not match. An example is the Metaphone for “shadow shomp inyon”:

XTXMPNYN, which has a “Y”.

3. Metaphone Token Query

Here, we first form our query. Let’s say the speaker is retrieving Chateau la Bridane Saint Julien.

They say only “julien bridane”, but they incorrectly pronounce each word. It is transcribed as "julian breedan".

- Tokenize the transcript:

"julian breedan"becomes["julian", "breedan"] - Get the Metaphone for each token:

["julian, "breedan"]becomes["JLN", "BRTN"] - Join the Metaphones:

["JLN", "BRTN"]becomes"JLN BRTN"

Then we query the Metaphone Token Index described above with our query.

Because our query contains "JLN" and "BRTN", the wine Chateau la Bridane Saint Julien will rank highly. Its tokens map to: ["XT", "L", "BRTN", "SNT", "JLN"].

Guy Breton Morgon will also rank. Its tokens map to ["K", "BRTN", "MRKN"].

Recall that we built this index using DuckDB’s full-text search extension, so we query it with the extension too.

Pros

- Works with inaccurate transcriptions.

- Works when speakers partially say the name of a wine.

- Works when the order of the words spoken do not match the wine’s record.

Cons

- This approach sometimes yields many irrelevant results that have similar-sounding words in their names.

- Fails when a transcription’s Metaphones do not match.

4. “Similar Token Metaphones” Query

Methods 1 to 3 handle:

- Accurate transcriptions

- Inaccurate transcriptions with matching Metaphones

This takes us far! But I would still get transcriptions without any matching Metaphones and I wanted to handle those.

I attempted a number of approaches, all using Levenshtein edit distance in creative ways on increasingly worse transcriptions. All of them worked.

The catch? They also introduced a lot of noise: the worse a transcription, the more permissive I had to be, which meant that my result set would also include lots of wines that looked and sounded nothing like the wine I was retrieving.

In information retrieval parlance, my search recallsuffered: I was retrieving an ever-higher percentage of irrelevant records.

I had a prototype to ship, so I settled with a basic method that:

- Didn’t return a torrent of random records.

- Could handle one extra, absent, or incorrect consonant that caused a token to generate a different Metaphone. For example, “bribane” maps to BRBN, while “bridane” – a token in our index – maps to BRTN.

Here’s how it works. Let’s say the speaker is retrieving Chateau la Bridane Saint Julien (Metaphones: XT L BRTN SNT JLN*).*

They say “bridane saint julien”, but we get a really bad transcription: "brenton sane julia", whose tokens map to ["**BRNTN**", "**SN**", "**JL**"].

We’re out of luck here with methods 1 to 3. Each will return zero results because we have no matching tokens nor matching Metaphones.

A “Similar Token Metaphone” query does the following:

- Tokenize the transcription:

"brenton sane julia"turns into["brenton", "sane", "julia"]. - Get all tokens in our index that are exactly 1 edit distance away from each token in our query: for

"brenton"this is["breton"], for"sane"this is[](an empty set), for"julia"this is[](an empty set). So we get["breton"]. - Join all tokens from step 2:

["breton"]becomes"breton". If we had more than one token, we’d join all of them with a space. - Use the string from step 3 to run a Metaphone Token Query. Our input to the query method described above is

"breton".

Because the Metaphone for "breton" is BRTN we get two records, one of which is our target wine:

- Chateau la Bridane Saint Julien (Metaphones:

XT L BRTN SNT JLN) - Guy Breton Morgon (Metaphones:

K BRTN MRKN)

Using a Metaphone Token Query in Step 4 instead of a DuckDB Full-Text Query casts a slightly wider net, which increases the likelihood that we catch the result we want when all our other query methods have failed.

In my limited observation, it worked spookily well without returning lots of irrelevant results. It is a total delight to read an awful transcription like “brenton sane julia” after you speak yet have it retrieve the record you’re looking for.

Pros

- Might succeed even when there are no matching Metaphones in a transcription.

Cons

- Might return a lot of irrelevant results.

5. “Metaphone Substring” Query

I added this final query approach to handle exactly one class of transcription error: transcriptions that break one word into multiple words. This happened fairly frequently.

Let’s say the speaker is retrieving Chateau Champignon, they say “champignon”, and the transcription we receive is "champ onion".

Query approaches 1 through 4 all fail:

- There is no wine that matches the tokens

"champ"or"onion". - The Exact Metaphone for

"champ onion"isXMPNN, for which there is no match in the Exact Metaphone Index. - The Metaphone Tokens for

"champ onion"areXMPandONN, for which there are no matches in the Metaphone Token Index. - There are no tokens 1 edit distance away from

"champ"and"onion"

It turns out that many of these cases have Exact Metaphones that are substrings of their target wine. In this case:

- The Exact Metaphone for

"champ onion"isXMPNN. - The Exact Metaphone for

"chateau champignon"isXT XMPNN.

… so a substring search in our Exact Metaphone Index succeeds where all our other query methods have failed.

Pros

- It’s simple.

- Handles a transcription error that happens frequently.

- Doesn’t return a lot of irrelevant results.

Cons

- Doesn’t help with anything other than this one class of error.

- Doesn’t always work: “shomp inyon” maps to “XMPNYN”.

Ranking results from all five approaches using Jaro-Winkler similarity

When we execute all five of our query approaches:

- We don’t know which approach, if any, has the result the speaker is looking for.

- Each approach might return multiple results.

So how do we surface the target wine from all these results?

It’s safe to assume that the transcript we receive is similar, by some measure, to the speaker’s target wine. If it’s not, then the transcript is probably too inaccurate – would a human be able to figure out which wine the sommelier is referring to?

The challenge here is determining an objective measure that consistently brings the target wine into our top 5 results. Doing this well requires data from real users and time to experiment, neither of which we had.

Aiming for a quick solution, I tried using text similarity using whatever was available off-the-shelf using DuckDB.

It turns out that Jaro-Winkler similarity works well enough for our purposes.

My implementation:

- Gets up to 10 results from every query approach.

- De-duplicates the set of results, because the same result might appear from multiple approaches.

- Calculates Jaro-Winkler similarity between each result and the transcription.

- Returns the top 5 ranked results.

This is a workable implementation, but if you play around with the demo you’ll observe it fail in basic ways, like ranking the target wine 5th when it seems implausible that the 1st, 2nd, 3rd, or 4th results are correct.

The problem with using text similarity is that we’re discarding everything we know about our query approaches and the kinds of results they return. For example, if we get results from a DuckDB full-text search, it means the transcript contains correctly-spelled tokens in our search index, which increases the probability that we have an accurate transcript. That’s information we could use to rank our search results!

How can this be improved?

With lots of experimentation! It helps enormously to have data from real users searching for wines.

In a real setting, I’d want to:

- Know what percentages of searches are successful over time.

- Have a set of (transcript, target wine) pairs that I can test against.

- Run A/B tests pitting search algorithms against each other.

Alas, we did not promote this prototype to production.

If I spent more time on this voice search implementation, here are some of the things I might try.

Ranking results: scores, weights, and phonetic algorithms

- Weight results from query approaches differently.

- Come up with a way to score results phonetically.

- Give higher scores to results if they surface in multiple approaches.

- Use different, or multiple phonetic algorithms for the task.

Using LLMs

- Use an LLM to surface the likeliest wines. I tried this briefly and couldn’t get it to work well, but it has promise.

- Use an LLM to generate plausible mis-transcriptions of every wine. (GPT-4 generates great, plausible transcriptions). Index all of these.

- Use the mis-transcriptions from the above to fine-tune an LLM to do this task.

Appendix

Is this product live? If not, will it be?

No, and probably not. Everyone hates inventory counts, but this was not a pressing enough problem for the GM at Ristorante or the 10 wine stores, meat/cheese shops, and restaurants I spoke with.

In the time following the week we spent building our initial prototype, we did not gather enough evidence for us to continue working on this product.

Can you build this, or something else, for us?

Yes.

I co-own Baxter, a product development and contracting company, with Gabriel Garrido. We’re a two-person team of seasoned engineers and we’ve built software and teams at numerous early stage startups.

Thanks to Gabriel Garrido, Phil Eaton, Pierre Jambet, Andy O’Neill, Chuck Groom, and Aaron Rosen.