
Why you should regularly and systematically evaluate your LLM results

Evaluating results from LLM pipelines is time-consuming, and I’d often rather poke my eye with a stick than look at another 100 results to decide if they are “good”.

But I do it anyway, and I cannot overstate how beneficial it is.

Here’s why you should do it:

- You’ll know when your changes are working.

- You’ll improve your LLM pipelines faster.

- You’ll build a fine-tuning dataset along the way.

- You’ll build better intuition for what does and doesn’t work well with LLMs.

These benefits compound if you evaluate LLM results regularly and systematically.

By regularly, I mean every time you make a change to your LLM pipeline. A change could be a new prompt, chunking strategy, model configuration, etc.

By systematically, I mean that you carefully examine your results and capture how good or bad they are, and why.

If you are not already doing this, picture me grabbing you by the shoulders and screaming “WHY NOT?!!”

Then read the rest of this post, which is a more constructive attempt at persuading you to evaluate your LLM responses.

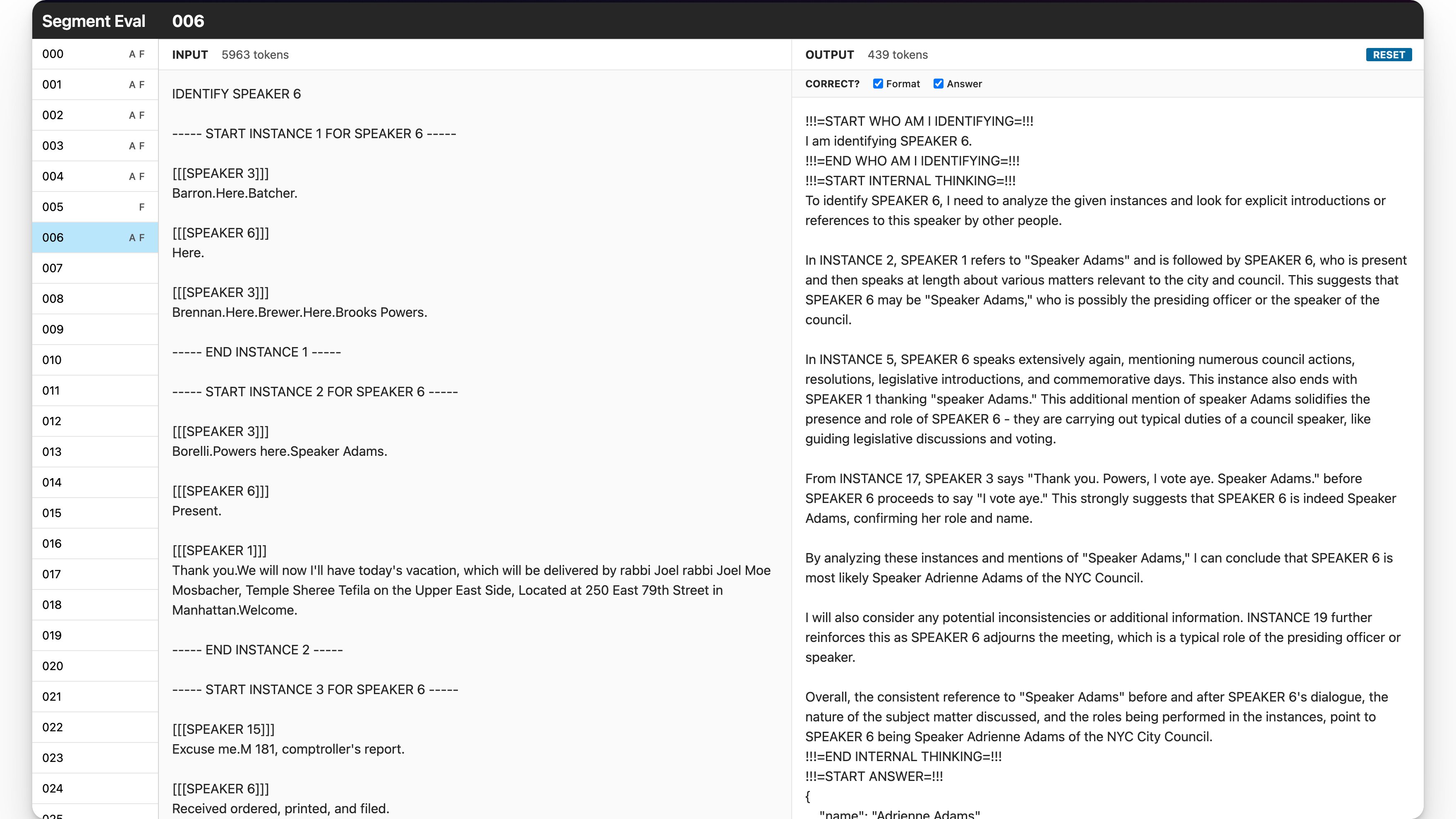

In the course of building the city council meeting tool described here, I spent many days trying to get gpt-4-turbo to accurately identify speakers in 4-hour meeting transcripts with upwards of 50 speakers.

I started with a simple approach: feed the entire transcript into gpt-4-turbo with a prompt. The problem is that gpt-4-turbo performs quite poorly on tasks over long contexts (~40K-100K tokens). I talk briefly about the issue in this post.

So I pursued a new strategy: slice my transcript into manageable chunks and try identifying speakers in each chunk instead.

I spent a day or two feeling around in the dark for the right chunking strategy and prompt without getting great results. I eventually asked Gabriel (a partner I work with from time to time) if he’d be willing to cobble together a UI to help me evaluate my results quickly.

It took a thirty-minute conversation and an afternoon of his time, and it was a complete game-changer for me.

I improved the performance of my approach dramatically over the course of a couple of days: my speaker identification accuracy went from 35-50% on Tuesday to 80-90% on Thursday.

To get to that point, I evaluated hundreds of responses and changed my prompt and chunking strategy to address specific classes of problems I observed.

Here are some problems I observed and changes I made to address them that improved speaker identification accuracy:

- The LLM responses would heavily weight self-introductions, like “I’m council member Brooks-Powers.”, and fail to draw conclusions from sentences like “I’d like to pass the mic to council member Restler.”

  - Solution: I added directions and examples showing how to identify a speaker based on neighboring context.

- Mistranscriptions would cause issues: “Jose” instead of “Ossé”, “Adrian” instead of “Adrienne”.

  - Solution: I provided a list of council member names and added directions to infer mistranscriptions. The LLM started figuring out when a name was a likely mistranscription and got many more inferences correct.

  - (If you’re interested in learning about other ways to handle mistranscriptions, I wrote this post about a voice search use case involving an Italian restaurant and sommeliers.)

- Because roll calls happen so fast, speaker diarization (identifying distinct speakers in audio and giving them a label) fails badly. As such, it is not reliable to infer a speaker’s identity based on them saying “Present” in a roll call. (The image showing the eval tool above shows an example of this problem.)

  - Solution: I added examples to my prompt to deter the LLM from doing this.

- In a few cases, the LLM hallucinated a council member’s district and got the wrong answer: “Adams is part of District X but the speaker said District Y, so I must infer that it is not Adams.”, when actually it was Adams.

  - Solution: I added the district and borough to my council member list.

- I noted that my chunks didn’t always have the context required to identify a speaker.

  - Solution: I modified my chunking strategy until they did.
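Several of the fixes above come down to what goes into the prompt. Here is a minimal sketch of how they might be folded together. It is an illustration, not the exact prompt used for this project: `CouncilMember`, `build_prompt`, the instruction wording, and the roster entries are all made-up placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CouncilMember:
    name: str
    district: int
    borough: str


# Placeholder roster: swap in real names, districts, and boroughs.
ROSTER = [
    CouncilMember("Example Member One", 1, "Example Borough A"),
    CouncilMember("Example Member Two", 2, "Example Borough B"),
]

# Hypothetical instruction wording, one line per class of problem.
INSTRUCTIONS = """\
Identify who is speaking under each speaker label in the transcript chunk.
- Use neighboring context, not just self-introductions. If one speaker hands
  off to a named person, the next speaker is probably that person.
- Names may be mistranscribed. Match them to the closest roster name.
- Do not identify a speaker from a one-word roll call reply like "Present".
- Use only the districts and boroughs in the roster. Never guess them."""


def build_prompt(chunk: str, roster: list[CouncilMember]) -> str:
    """Combine instructions, the roster, and a transcript chunk into one prompt."""
    roster_lines = "\n".join(
        f"- {m.name} (District {m.district}, {m.borough})" for m in roster
    )
    return (
        f"{INSTRUCTIONS}\n\n"
        f"Council members:\n{roster_lines}\n\n"
        f"Transcript chunk:\n{chunk}"
    )


prompt = build_prompt("SPEAKER_03: ...", ROSTER)
```

A real prompt would also carry few-shot examples for each problem class. Keeping the roster as data rather than hard-coded text makes it easy to add fields like district and borough later.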

I did this over and over again for many classes of errors, evaluating hundreds of responses until I consistently managed to get an 80-90% accuracy rate.
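To make "systematically" concrete, here is one possible way to record each judgment and see which error classes dominate. The `Evaluation` fields, the error class tags, and the sample data are assumptions for illustration, not the schema of the eval tool shown above.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Evaluation:
    run: str                           # label for the prompt/chunking version
    correct: bool                      # did the LLM name the right speaker?
    error_class: Optional[str] = None  # short tag explaining a wrong answer


def accuracy(evals: list[Evaluation]) -> float:
    """Fraction of evaluated responses marked correct (0.0 if there are none)."""
    if not evals:
        return 0.0
    return sum(e.correct for e in evals) / len(evals)


def error_counts(evals: list[Evaluation]) -> Counter:
    """Count wrong answers per error class; untagged errors are 'unlabeled'."""
    return Counter(e.error_class or "unlabeled" for e in evals if not e.correct)


# Made-up evaluations for illustration only.
evals = [
    Evaluation("prompt-v1", True),
    Evaluation("prompt-v1", False, "roll_call"),
    Evaluation("prompt-v1", False, "mistranscription"),
    Evaluation("prompt-v1", False, "roll_call"),
]

print(f"accuracy: {accuracy(evals):.0%}")
print(error_counts(evals).most_common())
```

Filtering the list by `run` before calling `accuracy` gives a like-for-like comparison between two versions of a pipeline, and the most common error class is usually the next thing worth fixing.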

These changes were all easy to make, but I didn’t know to make them until I systematically reviewed my inputs and outputs. Once I did, getting better performance from my LLM pipeline took very little time.

As a bonus, I’ve stashed away every evaluated response so that when it makes sense for me to fine-tune a model for my task, I already have the dataset to do it.
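As a rough sketch of what stashing responses for later can look like, the snippet below writes verified examples as JSON Lines in a chat-message layout. That layout is an assumption: it matches what chat fine-tuning APIs such as OpenAI's accept, but check your provider's documentation. The helper names are hypothetical.

```python
import json


def to_finetune_line(prompt: str, verified_answer: str) -> str:
    """Serialize one evaluated example as a JSON line in chat-message form."""
    record = {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": verified_answer},
        ]
    }
    # ensure_ascii=False keeps names like "Ossé" readable in the file.
    return json.dumps(record, ensure_ascii=False)


def write_dataset(examples: list[tuple[str, str]], path: str) -> int:
    """Write (prompt, verified_answer) pairs to a JSONL file; return the count."""
    with open(path, "w", encoding="utf-8") as f:
        for prompt, answer in examples:
            f.write(to_finetune_line(prompt, answer) + "\n")
    return len(examples)


line = to_finetune_line("Who is SPEAKER_03 in this chunk? ...", "Ossé")
```

Only answers you have verified, or corrected by hand, should go into the file. Otherwise the fine-tuned model learns the same mistakes you were trying to remove.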

The last thing that evaluating my LLM results systematically has helped me with: I’ve built useful intuition around what a good prompt needs to have and what improvements are worth making now vs. later.

Today, my initial attempts at prompting LLMs work more effectively and my improvements are swift and significant. I know that a good, diverse set of few-shot examples is the highest-leverage way to improve a prompt, so I race to get good examples, fast.

My guesses around how I should chunk lengthy passages are more accurate, and I have a sense for how big those chunks should be (4-6K tokens is an anecdotal sweet spot, but up to 10K can work well in many cases).
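One simple way to chunk with a token budget is to pack whole speaker turns into chunks and repeat the last few turns at the start of the next chunk for context. The sketch below is not the strategy I landed on; `chunk_turns`, its defaults, and the 4-characters-per-token estimate are assumptions, and a real tokenizer should replace `rough_token_count`.

```python
from typing import Callable


def rough_token_count(text: str) -> int:
    """Crude estimate (about 4 characters per token); swap in a real tokenizer."""
    return max(1, len(text) // 4)


def chunk_turns(
    turns: list[str],
    max_tokens: int = 5000,
    overlap_turns: int = 2,
    count_tokens: Callable[[str], int] = rough_token_count,
) -> list[list[str]]:
    """Pack speaker turns into chunks of roughly max_tokens, repeating the last
    overlap_turns turns of each chunk at the start of the next for context.

    A single oversized turn, or a large overlap, can push a chunk over budget.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    current_tokens = 0
    for turn in turns:
        cost = count_tokens(turn)
        if current and current_tokens + cost > max_tokens:
            chunks.append(current)
            # Start the next chunk with the tail of the one just closed.
            current = current[-overlap_turns:] if overlap_turns > 0 else []
            current_tokens = sum(count_tokens(t) for t in current)
        current.append(turn)
        current_tokens += cost
    if current:
        chunks.append(current)
    return chunks
```

Splitting on turn boundaries keeps each speaker's words intact, and the overlap gives the model a chance to see a handoff like "I'd like to pass the mic to..." even when it falls at the edge of a chunk.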

I’ve picked up tricks and techniques that I use constantly, like this one about making up your own markup language to prevent hallucinations, and using chain-of-thought not only to get better answers, but to observe why the LLM did what it did so I know how it screwed up or, occasionally, how it made a surprising and accurate association.
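To read the model's reasoning separately from its answer, one option is to ask for each inside its own tag and pull them apart afterwards. The tag names and the response below are hypothetical, and this is not the markup technique from the linked post, just a minimal sketch of the idea.

```python
import re
from typing import Optional


def extract_tag(response: str, tag: str) -> Optional[str]:
    """Return the text inside the first <tag>...</tag> pair, or None if absent."""
    pattern = rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>"
    match = re.search(pattern, response, re.DOTALL)
    return match.group(1).strip() if match else None


# Made-up response in a hypothetical format the prompt would have to request.
response = """
<reasoning>
The previous speaker passed the mic to a named council member, so the next
speaker label is probably that member.
</reasoning>
<speaker>Example Member One</speaker>
"""

reasoning = extract_tag(response, "reasoning")  # read this while evaluating
speaker = extract_tag(response, "speaker")      # compare this to ground truth
```

A `None` result means the model ignored the requested format, which is worth recording as its own error class.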

All this is to say: if the performance of your LLM results matters for your use case, you should be systematically evaluating them. And I mean you, specifically: the person implementing the feature that relies on LLM output.

Scaling may eventually require you to outsource this work.

But you can get very far doing this work on your own, you will learn a ton, and you will gain important context and control over the quality of your LLM-powered feature by doing it yourself.